Natalie Cooper is Senior Manager and Robert Lamm is an Independent Senior Advisor, at the Center for Board Effectiveness, Deloitte LLP; and Randi Val Morrison is Vice President, Reporting & Member Support at the Society for Corporate Governance. This post is based on their Deloitte and Society for Corporate Governance publication.

Artificial intelligence (AI), the use of technology to execute or simulate processes that would otherwise require human intelligence, is not new. But rapidly expanding technologies and evolving consumer digital preferences and expectations have generated intense interest in leveraging AI to help achieve efficiencies, increase competitive advantage, and enhance engagement with customers/clients and other stakeholders.

As companies continue to explore and invest in AI, they also are tasked with considering numerous business implications, such as ethics, compliance, and regulatory processes; risks (e.g., operational and reputational) and risk appetite; equity; governance; and the role of the board. This post presents findings from a survey of members of the Society for Corporate Governance that focused on aspects of AI, including where in the organization AI resides, use policies/framework, risk mitigation measures, education and training, and board oversight.

Findings

Respondents, primarily corporate secretaries, in-house counsel, and other in-house governance professionals, represent 97 public companies of varying sizes and industries. [1] The findings pertain to these companies, and, where applicable, commentary has been included to highlight differences among respondent demographics. The actual number of responses for each question is provided.

Access results by company size and type.

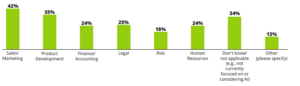

In which areas is your company currently focused on or considering artificial intelligence (AI) usage, strategy, impact (e.g., disruption, competitive advantage, risk), or other reason? [Select all that apply] (79 responses)

The most common responses for large-caps were Sales/Marketing (55%), Product Development (48%), and Legal (42%). For mid-caps, they were Don’t know/not applicable (48%), Sales/Marketing (33%), and Product Development (30%). Other areas specified by some respondents included operations; strategic opportunities; customer service; manufacturing predictive maintenance; regulatory/surveillance; compliance; and back-office functions.

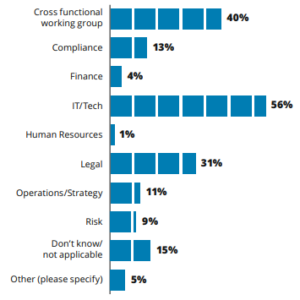

Which functional area or department in your company has primary responsibility for AI matters? [Select all that apply] (75 responses)

Other areas specified by some respondents included “evolving in real time” and “each business/function is defining its overall AI strategy and then reviewing it with the chief digital officer.”

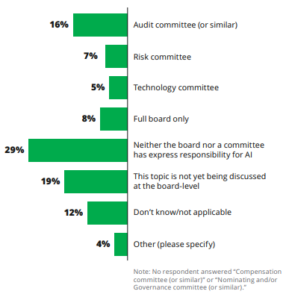

Where does primary oversight for AI lie within your company’s board? (75 responses)

The most frequently cited response, that neither the board nor a board committee has express responsibility for AI, was reported by 25% of large-caps and 38% of mid-caps. The next most common response, that AI is not yet being discussed at the board level, was reported by 19% of large-caps and 22% of mid-caps.

For those that have delegated oversight of AI to the full board or a committee, responses by market cap were:

- Large-caps—19% audit committee, 13% technology committee, and 13% full board

- Mid-caps—11% audit committee, 5% risk committee, and 5% full board

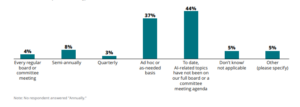

Describe the frequency of AI-related topics on the meeting agendas for the full board or board committee(s) with oversight responsibility. [Select all that apply] (73 responses)

Most common responses:

- Ad hoc or as-needed basis, reported by 52% of large-caps and 31% of mid-caps.

- To date, AI-related topics have not been on our full board or a committee meeting agenda, reported by 35% of large-caps and 53% of mid-caps.

Supplemental comments provided by some respondents were:

- “AI is discussed in connection with particular applications (e.g., in engaging with consumers).”

- “Frequency is ad hoc but is increasing in interest and relating to multiple presentations/discussions.”

- “AI has been discussed as part of larger cybersecurity discussions.”

- “AI was covered by the Technology Committee in Q1 2023.”

Does your company permit the use of AI tools by employees? (69 responses)

Nearly half (48%) of all public company respondents reported that use of AI tools is not expressly permitted or prohibited; however, this varied significantly across market caps (25% of large-caps and 63% of mid-caps). Further, 14% of large-caps reported they are currently considering whether to permit use by their employees compared to 6% of mid-caps.

For companies that allow use of AI tools by employees, 36% of large-caps and 20% of mid-caps allow use for specific purposes compared to 14% of large-caps and 3% of mid-caps that permit use for any purpose.

Some respondents provided comments to further describe or specify how their companies permit use:

- “At this point, use of AI tools is limited to marketing and product development.”

- “AI can only be used within the bounds of our existing policies regarding confidential information, etc.”

- “Use of AI tools must have a legitimate business purpose.”

- “Employees have broad authority to use services for assistance addressing non-confidential matters, like emails, presentations, etc.”

- “HR, legal, finance, product and technology, and sales functions can use AI tools, all with guardrails and policies.”

- “We do not have a specific policy for AI, but we have a policy that only company-approved applications may be used for business purposes.”

- “AI tools may be used but with restrictions on the inputting of company confidential data.”

- “Company-licensed tools are allowed for specific purposes; otherwise, generative AI is allowed for non-confidential use within established internal guardrails.”

- “We have not blocked it for use by employees but have prohibited confidential information from being entered into AI tools.”

- “All code of conduct provisions apply to new tools and channels.”

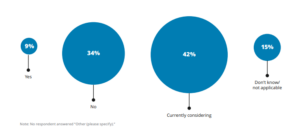

Does your company have an AI use framework, AI policy or policies, or AI code of conduct? (70 responses)

The greatest variation across responses by market cap:

- 17% of large-caps and 46% of mid-caps reported no.

- 48% of large-caps and 23% of mid-caps reported currently considering.

One respondent specified: “We just published stand-alone guidance, focusing on the protection of company confidential data.”

Has your company revised corporate policies, such as privacy, cyber, risk management, records retention, etc., to address the use of AI? (67 responses)

The greatest variation across responses by market cap:

- 18% of large-caps and 50% of mid-caps reported no.

- 57% of large-caps and 29% of mid-caps reported currently considering.

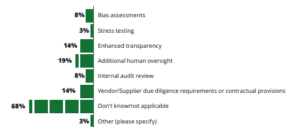

What risk mitigation measures pertaining to AI has your company adopted/implemented? [Select all that apply] (63 responses)

The greatest variation in responses across market caps was related to adoption/implementation of vendor/supplier due diligence requirements or contractual provisions, reported by 8% of large-caps vs. 19% of mid-caps.

Endnotes

[1] Public company respondent market capitalization as of December 2022: 44% large-cap (which includes mega- and large-cap) (> $10 billion); 47% mid-cap ($700 million to $10 billion); and 8% small-cap (which includes small-, micro-, and nano-cap) (< $700 million). Public company respondent industry breakdown: 33% consumer; 25% financial services; 24% energy, resources, and industrials; 12% technology, media, and telecommunications; and 6% life sciences and health care.

Small-cap and private company findings have been omitted from this report and the accompanying demographics report due to limited respondent population.

Throughout this report, percentages may not total 100 due to rounding and/or a question that allowed respondents to select multiple choices.